Message boards : Graphics cards (GPUs) : OpenCL and float4 performances of high end cards


Hi,

```
1 OpenCL platform(s) detected:
Platform 0: NVIDIA Corporation NVIDIA CUDA OpenCL 1.0 CUDA 4.0.1, FULL_PROFILE
2 device(s) found supporting OpenCL:
Device 0:
  CL_DEVICE_NAME = Tesla M2090
  CL_DEVICE_VENDOR = NVIDIA Corporation
  CL_DEVICE_VERSION = OpenCL 1.0 CUDA
  CL_DRIVER_VERSION = 270.41.19
  CL_DEVICE_MAX_COMPUTE_UNITS = 16
  CL_DEVICE_MAX_WORK_ITEM_DIMENSIONS = 3
  CL_DEVICE_MAX_WORK_ITEM_SIZES = 1024 / 1024 / 64
  CL_DEVICE_MAX_WORK_GROUP_SIZE = 1024
  CL_DEVICE_MAX_CLOCK_FREQUENCY = 1301 MHz
  CL_DEVICE_GLOBAL_MEM_SIZE = 5375 MB
  CL_DEVICE_ERROR_CORRECTION_SUPPORT = YES
  CL_DEVICE_LOCAL_MEM_SIZE = 48 kB
  CL_DEVICE_MAX_CONSTANT_BUFFER_SIZE = 64 kB
Compiling...
Starting tests...
[float ] Time: 0.207866s, 1322.38 GFLOP/s
[float2 ] Time: 0.414785s, 1325.40 GFLOP/s
[float4 ] Time: 0.827457s, 1328.78 GFLOP/s
[float8 ] Time: 1.652792s, 1330.49 GFLOP/s
```
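For context, a Fermi card's theoretical single-precision peak can be estimated as compute units × cores per compute unit × shader clock × 2, counting one FMA as two FLOPs. The minimal C sketch below assumes 32 CUDA cores per compute unit (the Fermi GF110 layout of the M2090) and takes the other two inputs from the device query above; the function names are only illustrative.

```c
/* Theoretical peak in GFLOP/s, assuming every core retires one
   fused multiply-add (2 FLOPs) per shader clock. */
double peak_gflops(int compute_units, int cores_per_unit, double clock_mhz)
{
    return compute_units * cores_per_unit * clock_mhz * 2.0 / 1000.0;
}

/* Measured throughput as a fraction of that peak. */
double efficiency(double measured_gflops, double peak)
{
    return measured_gflops / peak;
}
```

`peak_gflops(16, 32, 1301.0)` gives about 1332 GFLOP/s, so even the plain `float` run above reaches better than 99% of peak on the M2090.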

ID: 26850

> It seems that GeForce works fast only with float2 or float4 data types whereas Fermi or Radeon 79xx are fast for all data types.

I take it you mean the GeForce versions of Kepler, as opposed to the K10? Teslas are prohibitively expensive for most people, so the GTX 690 would be the top choice. For double precision the K10 would be terrible, as it's just two Kepler GK104s: 190 GFLOP/s versus 665 for the M2090. For single precision the official figures are 4577 GFLOP/s versus 1331 for the M2090, but that doesn't say which float type they were measured with.

I don't know what's predominantly used here (float/float2/float4/float8/float16), or in what proportions (I expect it varies from one task type to another), but the results might be of some interest to the researchers/devs.

Trimmed (5850) Cypress on W7x64, for 13108 blocks:

| Data type | GFLOP/s |
|---|---|
| float | 430.9 |
| float2 | 861.9 |
| float4 | 1722.8 |
| float8 | 1721.8 |
| float16 | 1438.8 |

The Cypress is best optimized for float4 and float8. The benchmark wouldn't run on W2K3x64, so I can't report GeForce Fermi results (GTX 470).
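The figures in this post can be compared directly. The sketch below only divides the numbers quoted above: the K10 and M2090 values are the official peaks, the Cypress values are the measured results from the table. The names `ratio`, `cypress` and `speedup_vs_float` are illustrative.

```c
#include <stddef.h>

/* Ratio of two throughput figures, e.g. K10 peak / M2090 peak. */
double ratio(double a, double b)
{
    return a / b;
}

/* Measured Cypress (HD 5850) results from the table above, in GFLOP/s,
   for float, float2, float4, float8 and float16. */
static const double cypress[] = {430.9, 861.9, 1722.8, 1721.8, 1438.8};

/* Speed-up of each vector width relative to the first entry (plain float). */
void speedup_vs_float(const double *gflops, size_t n, double *out)
{
    for (size_t i = 0; i < n; i++)
        out[i] = gflops[i] / gflops[0];
}
```

This gives about 3.4× in favour of the K10 in single precision but only about 0.29× (190/665) in double precision. For the Cypress, float2 is almost exactly 2× and float4 almost exactly 4× plain float, which is consistent with a VLIW design that only fills its slots when each work-item supplies several independent operations; float16 drops back to about 3.3×.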

ID: 26852

> It seems that GeForce works fast only with float2 or float4 data types whereas Fermi or Radeon 79xx are fast for all data types.

Hi, thanks. Exactly; my point was that Kepler GeForce cards seem to be optimized for float2/float4. I can't reach the peak numbers using float, and even with float4, float8 or float16 I get at most 90% of the theoretical maximum. Pre-Kepler NVIDIA GPUs don't behave like this. I know that earlier Radeons are optimized for float4 because of graphics processing (pixel representation, etc.); with the 7xxx series they changed the architecture.

I know that the Tesla K10 is quite expensive, which is why I don't want to buy one and then discover the same thing. I was hoping that someone here might use it, since there are a lot of people with NVIDIA GPUs.

GTX 680 and GTX 590 using float (1) in CUDA:

> I don't know what's predominantly being used here (float/float2/float4/float8/float16), or the proportions (I expect it might vary from one task type to another), but the results might be of some interest to the researchers/devs.

CUDA doesn't support vector operations on float2, float4, etc. (the types exist, but there are no built-in arithmetic operators for them). Cg and pixel/vector shaders do (or did), and so does OpenCL. If a project uses CUDA, then it is definitely using float and double. Other projects can use float2 and/or float4 through ATI CAL, I suppose. If the K10 performs like the GTX cards, then the 7970 or 7990 will be my choice, I guess.

Anyway, this is what I get with the GTX 680:

Radeon 6990 (one core), overclocked to 900 MHz:

Radeon 5970 (one core), overclocked to 800 MHz:

Tahiti, overclocked to 1000 MHz, gets to 99%:

ID: 26853

What does the x in floatx mean? The number of elements in the vector you're working on?

ID: 26857

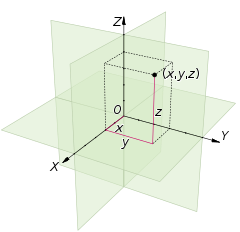

Your 'x' is the vector width of the data type, so float4 is a four-component vector type whose components are addressed as (.x, .y, .z, .w) or (.s0, .s1, .s2, .s3). An atom's location and mass can be described by one such vector, and a second four-vector can be used for its movement, with the last component left unused (-).
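A minimal plain-C sketch of that layout follows. The `vec4` struct stands in for OpenCL's `float4`, and the `atom` struct and the `integrate` step are hypothetical, meant only to show which components carry what.

```c
/* Stand-in for OpenCL's float4: four packed single-precision components. */
typedef struct { float x, y, z, w; } vec4;

/* pos.x, pos.y, pos.z = coordinates, pos.w = mass;
   vel.x, vel.y, vel.z = movement (velocity), vel.w unused. */
typedef struct { vec4 pos; vec4 vel; } atom;

/* One explicit Euler step: move each coordinate by vel * dt.
   Only x, y and z are updated, so the mass stored in pos.w is preserved. */
void integrate(atom *a, float dt)
{
    a->pos.x += a->vel.x * dt;
    a->pos.y += a->vel.y * dt;
    a->pos.z += a->vel.z * dt;
}
```

In OpenCL C, if the unused `vel.w` is kept at 0, the same update can be a single vector operation, `pos += vel * dt;`, without disturbing the mass in `pos.w`.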

ID: 26861

> What does the x in floatx mean? The number of elements in a vector you're working on?

Yes, it's the number of elements in the vector.

> Your 'x' is for the vector data type or width, so float4 is for a 4 vector data type (.x, .y, .z, .w) or (.s0, .s1, .s2, .s3). An atom's location and mass can be described by this and a second four-vector used for movement with the last ordinate not used (-).

Actually, I didn't know there was a difference in how you access them; according to the documentation they are interchangeable. But I use this notation:

```
float4 a;
float4 b;
a = [s0 s1 s2 s3]
b = [s0 s1 s2 s3]
```

Then:

```
a * b = [a.s0 * b.s0  a.s1 * b.s1  a.s2 * b.s2  a.s3 * b.s3]
a + b = [a.s0 + b.s0  a.s1 + b.s1  a.s2 + b.s2  a.s3 + b.s3]
```
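The element-wise rules above can be modelled in plain C. The sketch below is a hypothetical stand-in for OpenCL's built-in `float4`: a union lets the same four floats be read by name (`v.x` to `v.w`) or by index (`s[0]` to `s[3]`), mimicking the `.x`/`.s0` aliases, and `add4`/`mul4` spell out the component-wise operators that OpenCL C provides natively.

```c
/* Hypothetical C model of OpenCL's float4: the named fields and the
   index array alias the same storage, like .x/.s0 through .w/.s3. */
typedef union {
    struct { float x, y, z, w; } v;
    float s[4];
} float4_t;

/* Component-wise sum: r.s[i] = a.s[i] + b.s[i]. */
float4_t add4(float4_t a, float4_t b)
{
    float4_t r;
    for (int i = 0; i < 4; i++)
        r.s[i] = a.s[i] + b.s[i];
    return r;
}

/* Component-wise product (not a dot product): r.s[i] = a.s[i] * b.s[i]. */
float4_t mul4(float4_t a, float4_t b)
{
    float4_t r;
    for (int i = 0; i < 4; i++)
        r.s[i] = a.s[i] * b.s[i];
    return r;
}
```

For example, with a = (1, 2, 3, 4) and b = (0.5, 0.5, 2, -1), `mul4` gives (0.5, 1, 6, -4) and `add4` gives (1.5, 2.5, 5, 3).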

Thanks for the clue; indeed, what I get on the GTX 680, GTX 670 and GT 650M is around 60-70%. Could you please elaborate on this a bit more (possibly via PM)? I would like to know exactly where this number comes from and how to program to reach 100% (I mostly write code and am not very architecture-aware, but apparently I need to be to utilize the hardware). I understand superscalar architecture, but I haven't seen it described in any GK104 or GK110 specification. My CUDA kernel (using floats) looks like this (it's just a simple FMAD benchmark):

```
{
	.reg .s32 %r<4>;
	.reg .f32 %f<3>;
	.reg .s64 %rd<4>;
	.reg .f64 %fd<10243>;
	// demoted variable
	.shared .align 4 .b8 result[4096];
	.loc 3 198 1
	mov.u32 %r1, %tid.x;
	mul.wide.u32 %rd1, %r1, 4;
	mov.u64 %rd2, result;
	add.s64 %rd3, %rd2, %rd1;
	ld.shared.f32 %f1, [%rd3];
	cvt.ftz.f64.f32 %fd1, %f1;
	.loc 3 204 1
	fma.rn.f64 %fd2, %fd1, 0d3FF028F5C28F5C29, 0d3FF028F5C28F5C29;
	fma.rn.f64 %fd3, %fd2, 0d3FF028F5C28F5C29, %fd2;
	fma.rn.f64 %fd4, %fd3, %fd2, %fd3;
	fma.rn.f64 %fd5, %fd4, %fd3, %fd4;
	fma.rn.f64 %fd6, %fd5, %fd4, %fd5;
	fma.rn.f64 %fd7, %fd6, %fd5, %fd6;
	fma.rn.f64 %fd8, %fd7, %fd6, %fd7;
	.............
	............. thousands
	.............
	fma.rn.f64 %fd10238, %fd10237, %fd10236, %fd10237;
	fma.rn.f64 %fd10239, %fd10238, %fd10237, %fd10238;
	fma.rn.f64 %fd10240, %fd10239, %fd10238, %fd10239;
	fma.rn.f64 %fd10241, %fd10240, %fd10239, %fd10240;
	.loc 3 221 1
	add.f64 %fd10242, %fd10240, %fd10241;
	cvt.rn.ftz.f32.f64 %f2, %fd10242;
	st.shared.f32 [%rd3], %f2;
	.loc 3 222 2
	ret;
}
```

I thought there shouldn't be any bottlenecks in this case.

Sorry for posting in this thread. I wanted to create a new one, but I couldn't, apparently because of my credits. It is actually related to CUDA, since I get the same results there; the only reason I am using OpenCL here is that it can utilize float2 or float4 types. If it's better, please move the post to a separate thread.

Well, that's what I am trying to establish; I just wanted to know if other people see the same numbers. My GeForce GPUs are from the same vendor, so at one point I thought it might be a hardware fault. As for Tesla, in my personal experience the cards behave slightly differently from the GeForce models (despite being based on the same chip), at least the C1060 and C/M20x0.

I have some time to decide; first I am waiting to see if a double-width 7990 comes out. I would prefer NVIDIA GPUs, since I am quite new to OpenCL and most of my code is CUDA.

The Tesla K20 is probably going to be far too expensive (knowing NVIDIA's pricing policy), and even the K10 is expensive compared to GeForce GPUs if you're thinking about buying four. Besides, I am primarily looking for SP FLOP/s.

I tried to get access to Intel MIC, but it's currently limited to a certain group of people, so I can't say much about its performance. It's supposed to be around 1 TFLOP/s per board. To be honest, I don't expect HD 8xxx and/or GTX 7xx before 2013. But if they do appear, I hope that, again, the top models will come first.

ID: 26875

[s0 s1 s2 s3] is OpenCL-specific. x, y, z, w is the mathematical nomenclature that was originally adopted by accelerator cards, derived from Cartesian coordinates, I expect.

ID: 26876

ID: 26881

A good introduction to the superscalar aspect of current NVIDIA GPUs has been written by AnandTech. Initially they talk about Fermis with compute capability 2.1 (the small ones). Here you've basically got 3 blocks of 16 shaders in each shader multiprocessor (SM). To utilize all 3 shader blocks, one would need to issue 3 instructions per clock. However, there are only 2 schedulers working on 2 independent warps. Each can dispatch 2 instructions, but the 2nd instruction can only be a shader instruction if it's independent of the 1st.

> `fma.rn.f64 %fd4, %fd3, %fd2, %fd3;`

Here you write the result of the FMA into `%fd4` (right?).

> `fma.rn.f64 %fd5, %fd4, %fd3, %fd4;`

In order to execute this instruction, the previous result must already be known, since `%fd4` is used as an input argument here. In this case superscalar execution cannot be used. The schedulers will issue one shader instruction each, which uses 2 of the 4 dispatch units. In the end only 32 of the 48 shaders in the SM are utilized (there could also be some load/store activity going on).

Later on, the article describes how the SM is extended to an SMX in Kepler, featuring 128 shaders that are guaranteed to be utilized and an additional 64 superscalar shaders, all arranged in groups of 32 and shared among 4 warp schedulers, each featuring 2 dispatch units.

MrS
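The counting in this explanation can be written down as a small model. This is a simplified sketch following the description above (schedulers × lanes fed per issued instruction × instructions issued per clock, capped at the number of cores), not a cycle-accurate model of either chip; the function and parameter names are illustrative.

```c
/* Fraction of an SM's cores that can be kept busy.
   cores:               shader cores in the SM/SMX
   schedulers:          warp schedulers per SM/SMX
   lanes_per_issue:     cores fed by one issued warp instruction
   issue_per_scheduler: 1 when consecutive instructions are dependent,
                        2 when dual issue of independent instructions works */
double sm_utilization(int cores, int schedulers, int lanes_per_issue,
                      int issue_per_scheduler)
{
    int busy = schedulers * lanes_per_issue * issue_per_scheduler;
    if (busy > cores)
        busy = cores; /* cannot use more cores than exist */
    return (double)busy / cores;
}
```

With dependent instructions (one issue per scheduler), a compute capability 2.1 SM gives 2 × 16 = 32 of 48 cores and a Kepler SMX gives 4 × 32 = 128 of 192 cores, i.e. two thirds in both cases, which lines up with the 60-70% reported earlier in the thread.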

ID: 26885

> [s0 s1 s2 s3] is OpenCL specific. x,y,z,w is the mathematical nomenclature that was originally adopted by accelerator cards - derived from Cartesian co-ordinates I expect,

Yes, I used to program shaders a few years back and it was indeed like this. But according to the OpenCL spec, the two notations are interchangeable here.

Interesting, I actually do see the same thing happening with GTX460.

You seem to be getting more than the expected numbers. Is it overclocked? There's an issue with some cards crashing with double16 or float16, where OpenCL returns an out-of-resources error. I am not sure how to solve this yet.

> A good introduction to the super-scalar aspect of current nVidia GPUs is written by Anandtech. Initially they talk about Fermis with compute capability 2.1 (the small ones). Here you've basically got 3 blocks of 16 shaders in each shader-multiprocessor (SM). To utilize all 3 shader blocks one would need to issue 3 instructions per clock. However, there are only 2 schedulers working on 2 independent warps. Each can dispatch 2 instructions, but the 2nd instruction can only be a shader-instruction if it's independent of the 1st instruction.

Thanks! I'll look into it. The main reason the code looks like this is to keep CUDA from compiling it away (the PTX compiler is quite smart and eliminates unnecessary code). Therefore I need to write dependent instructions; otherwise they would have been skipped. I hadn't thought about this until now, especially since I do reach peak FLOP/s on CPUs. The scheduler theory sounds right. I will try to modify the code somehow to make it utilize Kepler fully.
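One way to reconcile both goals (keeping the compiler from discarding the work while still giving the schedulers independent instructions) is to interleave several independent dependency chains and combine them only at the end. The C sketch below is hypothetical and uses two chains following the same new = prev * prevprev + prev pattern as the PTX listing; a C compiler may or may not fuse each multiply-add into a single FMA instruction.

```c
/* Hypothetical FMA benchmark body with two independent dependency chains.
   Each step computes new = prev * prevprev + prev, the same pattern as the
   fma instructions in the PTX listing. Within one iteration the a- and
   b-updates do not depend on each other; across iterations each chain is
   still serial. Returning a1 + b1 keeps both chains live, so an optimizing
   compiler cannot drop the work as dead code. */
float two_chain_kernel(float seed, int steps)
{
    float a0 = seed, a1 = seed;               /* chain A: prevprev, prev */
    float b0 = 0.5f * seed, b1 = 0.5f * seed; /* chain B: prevprev, prev */

    for (int i = 0; i < steps; i++) {
        float a2 = a1 * a0 + a1; /* depends only on chain A */
        float b2 = b1 * b0 + b1; /* depends only on chain B */
        a0 = a1;
        a1 = a2;
        b0 = b1;
        b1 = b2;
    }
    return a1 + b1;
}
```

Independent chains only remove the dependency between neighbouring instructions; each chain is still serial, so pipeline latency can still limit throughput.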
Edit: I modified the code and now have something like this:

```
fma.rn.ftz.f32 %f19, %f17, %f15, %f17;
fma.rn.ftz.f32 %f20, %f18, %f16, %f18;
fma.rn.ftz.f32 %f21, %f19, %f17, %f19;
fma.rn.ftz.f32 %f22, %f20, %f18, %f20;
fma.rn.ftz.f32 %f23, %f21, %f19, %f21;
fma.rn.ftz.f32 %f24, %f22, %f20, %f22;
fma.rn.ftz.f32 %f25, %f23, %f21, %f23;
fma.rn.ftz.f32 %f26, %f24, %f22, %f24;
fma.rn.ftz.f32 %f27, %f25, %f23, %f25;
fma.rn.ftz.f32 %f28, %f26, %f24, %f26;
fma.rn.ftz.f32 %f29, %f27, %f25, %f27;
fma.rn.ftz.f32 %f30, %f28, %f26, %f28;
fma.rn.ftz.f32 %f31, %f29, %f27, %f29;
fma.rn.ftz.f32 %f32, %f30, %f28, %f30;
```

It didn't help. CUDA output (float32):

```
[Device 0, GeForce GT 650M] Time: 1.496291 (ms), total FLOPs : 687194767360
[Device 0, GeForce GT 650M] Peak GFLOP/s: 691.2, Actual GFLOP/s: 459.3, 66.445% efficiency
```
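As a consistency check on this output: the printed FLOP count divided by the printed time reproduces the reported 459.3 GFLOP/s only if the time is in seconds (read as milliseconds it would be several hundred times the peak), so the "(ms)" label looks like a typo in the benchmark's print statement. The sketch assumes 384 cores at 900 MHz for the GT 650M, which reproduces the printed 691.2 GFLOP/s peak; the function names are illustrative.

```c
/* Achieved throughput in GFLOP/s from a FLOP count and a time in seconds. */
double achieved_gflops(double total_flops, double seconds)
{
    return total_flops / seconds / 1e9;
}

/* Peak in GFLOP/s: cores x clock (MHz) x 2 FLOPs per FMA. */
double peak_gflops_from_cores(int cores, double clock_mhz)
{
    return cores * clock_mhz * 2.0 / 1000.0;
}
```

`achieved_gflops(687194767360.0, 1.496291)` is about 459.3 and `peak_gflops_from_cores(384, 900.0)` is 691.2, giving the 66.4% efficiency shown. That is close to the two thirds a Kepler SMX reaches when each scheduler issues only one instruction per clock (128 of 192 cores).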

ID: 26887

Yes, it is overclocked. The shaders are running at around 1280 MHz (dynamic boost).

ID: 26888

Regarding your modification: it looks good, but I don't know the exact requirements for instructions to count as independent. There's quite a bit of latency before the 1st instruction completes its trip through the pipeline (and the 2nd one can be issued), but I don't know how much. Actually, the chip should be able to hide this by keeping many warps in flight at any time, exploiting the "embarrassingly parallel" nature of graphics. The compiler might also have a say in this: I think that with Kepler and its static scheduling, the compiler would have to classify the instructions as independent. Again, I have no idea how to verify whether this is happening or what can be done to influence it.
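The latency-hiding argument can be made concrete with Little's law: if a result takes L clocks to become available and a scheduler wants to issue I instructions per clock, about L × I independent instructions must be in flight, supplied either by more warps or by more independent chains per warp. The sketch below is generic; the latency values in the example are hypothetical placeholders, since, as noted above, the actual figure isn't known here.

```c
/* Little's-law estimate of how many warps one scheduler needs to stay busy.
   latency_clocks:  clocks until a result can be consumed (hypothetical input)
   issue_per_clock: instructions the scheduler wants to issue each clock
   ilp:             independent chains (instructions in flight) per warp */
int warps_needed(int latency_clocks, int issue_per_clock, int ilp)
{
    int in_flight = latency_clocks * issue_per_clock;
    return (in_flight + ilp - 1) / ilp; /* round up to whole warps */
}
```

With a hypothetical 10-clock latency and dual issue, `warps_needed(10, 2, 1)` is 20 warps per scheduler for a single dependent chain, but `warps_needed(10, 2, 2)` is only 10 with two interleaved chains.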

ID: 26889
