Message boards : Graphics cards (GPUs) : gtx680

| Author | Message |

|---|---|

|

http://en.wikipedia.org/wiki/Comparison_of_Nvidia_graphics_processing_units#GeForce_600_Series | |

| ID: 22760 | Rating: 0 | rate:

| |

|

Far too high; unless the 256 cuda cores of a GTX650 for $179 really will outperform the 1024 cuda cores of a one year old GTX590 ($669). No chance; that would kill their existing market, and you know how NVidia likes to use the letter S. | |

| ID: 22774 | Rating: 0 | rate:

| |

|

Wow, that looks totally stupid! Looks like a boy's christmas wish list. Well, before every new GPU generation you'll find a rumor for practically every possible (and impossible) configuration floating around.. | |

| ID: 22775 | Rating: 0 | rate:

| |

|

Rumors say there's too large a bug in the PCIe 3 part of the Kepler A1 stepping, which means introduction will have to wait for another stepping -> maybe April. | |

| ID: 23109 | Rating: 0 | rate:

| |

|

Now there are rumours that they will be released this month. | |

| ID: 23247 | Rating: 0 | rate:

| |

|

I don't expect anything from nVidia until April. | |

| ID: 23249 | Rating: 0 | rate:

| |

|

Another article with a table of cards/chip types: | |

| ID: 23280 | Rating: 0 | rate:

| |

|

That's the same as what's being posted here. Looks credible to me for sure. Soft evolution of the current design, no more CC 2.1 style superscalar shaders (all 32 shaders per SM). Even the expected performance compared to AMD fits. | |

| ID: 23283 | Rating: 0 | rate:

| |

|

Charlie's always good for a read on this stuff - he seems to have mellowed in his old age just lately :) | |

| ID: 23291 | Rating: 0 | rate:

| |

|

GK110 release in Q3 2012.. painful for nVidia, but quite possible given they don't want to repeat Fermi and it's a huge chip, which needs another stepping before final tests can be made (some other news 1 or 2 weeks ago). | |

| ID: 23293 | Rating: 0 | rate:

| |

|

No more CC 2.1-like issues will mean choosing a GF600 NVidia GPU to contribute towards GPUGrid will be easier; basically down to what you can afford. | |

| ID: 23294 | Rating: 0 | rate:

| |

|

They are probably going to be CC 3.0. What ever that will mean ;) | |

| ID: 23295 | Rating: 0 | rate:

| |

|

I'm concerned about the 256-bit memory path and comments such as "The net result is that shader utilization is likely to fall dramatically". Suggestions are that unless your app uses physics 'for Kepler' performances will be poor, but if they do use physics 'for Kepler' performances will be good. Of course only games sponsored by Nvidia will be physically enhanced 'for Kepler', not research apps. | |

| ID: 23298 | Rating: 0 | rate:

| |

|

If the added speed is due to some new instructions, then we might be able to take advantage of them or not. We have no idea. Memory bandwidth should not be a big problem. | |

| ID: 23304 | Rating: 0 | rate:

| |

|

GK104 is supposed to be a small chip. With 256 bit bandwidth you can easily get HD6970 performance in games without running into limitations. Try to push performance considerably higher and your shaders will run dry. That's what Charlie suggests. | |

| ID: 23319 | Rating: 0 | rate:

| |

|

Some interesting info: | |

| ID: 23386 | Rating: 0 | rate:

| |

|

http://www.brightsideofnews.com/news/2012/2/10/real-nvidia-kepler2c-gk1042c-geforce-gtx-670680-specs-leak-out.aspx | |

| ID: 23410 | Rating: 0 | rate:

| |

|

If you can only use 32 of the 48 cuda cores on 104 then you could be looking at 32 from 96 with Kepler, which would make them no better than existing hardware. Obviously they might have made changes that allow for easier access, so we don't know that will be the case, but the ~2.4 times performance over 104 should be read as 'maximum' performance, as in 'up to'. My impression is that Kepler will generally be OK cards with some exceptional performances here and there, where physics can be used to enhance performance. I think you will have some development to do before you get much out of the card, but hey that's what you do! | |

| ID: 23416 | Rating: 0 | rate:

| |

|

no, it should be at least 64/96, but still i hope they have improved the scheduling. | |

| ID: 23419 | Rating: 0 | rate:

| |

|

32 from 96 would mean going 3-way superscalar. They may be green, but they're not mad ;) | |

| ID: 23427 | Rating: 0 | rate:

| |

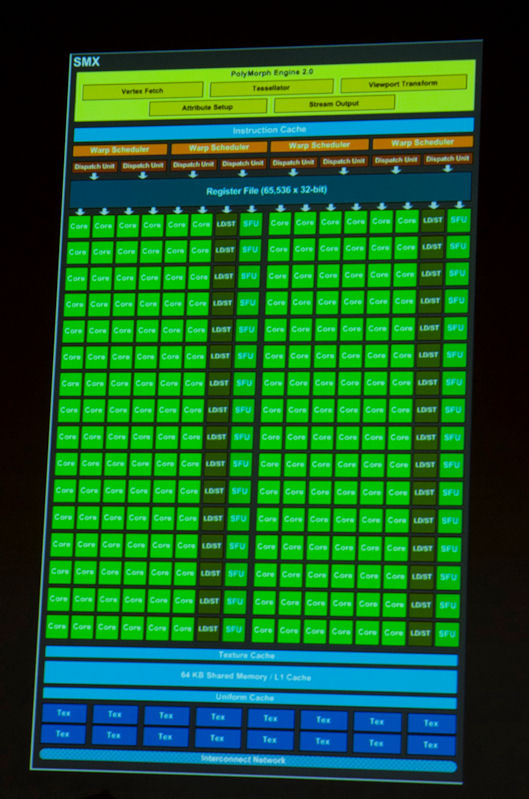

I wouldn't be surprised if they process each of the 32 threads/warps/pixels/whatever in a wave front in one clock, rather than 2 times 16 in 2 clocks. That's what it seems from the diagram, they have 32 load/store units now. | |

| ID: 23441 | Rating: 0 | rate:

| |

|

They've got the basic parameters of the HD7970 totally wrong, although it's been officially introduced 2 months ago. Performance is also wrong: it should be ~30% faster than HD6970 in games, but they're saying 10%. They could argue that their benchmark is not what you'd typically get in games.. but then what else is it? | |

| ID: 23572 | Rating: 0 | rate:

| |

|

It seems that we are close | |

| ID: 23781 | Rating: 0 | rate:

| |

|

More news. | |

| ID: 23835 | Rating: 0 | rate:

| |

|

More rumours ... Guru3D article: | |

| ID: 23914 | Rating: 0 | rate:

| |

|

Alleged pics of a 680... | |

| ID: 23961 | Rating: 0 | rate:

| |

|

Better pictures, benchmarks and specifications. | |

| ID: 23973 | Rating: 0 | rate:

| |

|

I still have a bad feeling about the 1536 CUDA cores.... | |

| ID: 23974 | Rating: 0 | rate:

| |

|

What sort of "bad feeling"? | |

| ID: 23976 | Rating: 0 | rate:

| |

|

I had the same sort of "bad feeling" - these cuda cores are not what they used to be, and the route to using them is different. Some things could be much faster if PhysX can be used, but if not who knows. | |

| ID: 23977 | Rating: 0 | rate:

| |

|

I wouldn't worry about it. I'm pretty sure the 6xx cards will be great. If they're not, you can always buy more 5xx cards at plummeting prices. There's really no losing here I think. | |

| ID: 23979 | Rating: 0 | rate:

| |

|

Well, at the very least they seem to be like the Fermi GPUs with 48 cores per multiprocessor, which we know have comparatively poor performance. | |

| ID: 23980 | Rating: 0 | rate:

| |

|

There's a price going to be paid for increasing the shader count by a factor of 3 while even lowering TDP. 28 nm alone is by far not enough for this. | |

| ID: 23982 | Rating: 0 | rate:

| |

|

Suggested price is $549, and suggested 'paper' launch date is 22nd March. | |

| ID: 23988 | Rating: 0 | rate:

| |

What sort of "bad feeling"? I have two things on my mind: 1. The GTX 680 looks to me more like an improved GTX 560 than an improved GTX 580. If the GTX 560's bottleneck is present in the GTX 680, then GPUGrid could utilize only 2/3 of its shaders (i.e. 1024 of 1536). 2. It could mean that the Tesla and Quadro series will be improved GTX 580s, and we won't have an improved GTX 580 in the GeForce product line. | |

| ID: 23994 | Rating: 0 | rate:

| |

|

Hi, what I find most strange is the following relationship: | |

| ID: 23996 | Rating: 0 | rate:

| |

|

That's because Kepler fundamentally changes the shader design. How is not exactly clear yet. | |

| ID: 23999 | Rating: 0 | rate:

| |

That's because Kepler fundamentally changes the shader design. How is not exactly clear yet. I know, but none of the rumors comfort me. I remember how much was expected of the GTX 460-560 line, and they are actually great for games, but not so good at GPUGrid. I'm afraid that nVidia wants to separate their gaming product line from the professional product line even more than before. I'd like to upgrade my GTX 590, because it's too noisy, but I'm not sure it will be worth it. We'll see in a few months. | |

| ID: 24002 | Rating: 0 | rate:

| |

|

They cannot afford to separate gaming and computing. The chips will still need to be the same for economy of scale and there is a higher and higher interest in computing within games. | |

| ID: 24003 | Rating: 0 | rate:

| |

|

Well.. even if they perform "only" like a Fermi CC 2.0 with 1024 or even 768 Shaders: that would still be great, considering they accomplish it with just 3.5 billion transistors instead of 3.2 billion for 512 CC 2.0 shaders. That's significant progress anyway. | |

| ID: 24007 | Rating: 0 | rate:

| |

Well.. even if they perform "only" like a Fermi CC 2.0 with 1024 or even 768 Shaders: that would still be great, considering they accomplish it with just 3.5 billion transistors instead of 3.2 billion for 512 CC 2.0 shaders. That's significant progress anyway. Agreed! Don't forget power consumption too. I want a chip, not a stove! Industry will never make a huge jump. They have to get value out of the research investment. Two small steps are always more profitable than one big one. ____________ HOW TO - Full installation Ubuntu 11.10 | |

| ID: 24008 | Rating: 0 | rate:

| |

|

Otherwise people will be disappointed the next time you "only" make a medium step.. ;) | |

| ID: 24009 | Rating: 0 | rate:

| |

|

Pre-Order Site in Holland - 500 Euros | |

| ID: 24015 | Rating: 0 | rate:

| |

|

I was thinking about what could be the architectural bottleneck which results in the underutilization of the CUDA cores in the CC2.1 product line. | |

| ID: 24018 | Rating: 0 | rate:

| |

|

I wouldn't expect things to work straight out of the box this time. I concur with Zoltan on the potential accessibility issue, or worsening of it. I'm also concerned about potential loss of cuda core function; what did NVidia strip out of the shaders? Then there is the much speculated reliance on PhysX and potential movement of some functionality onto the GPU. So, looks like app development might keep Gianni away from mischief for some time :) | |

| ID: 24024 | Rating: 0 | rate:

| |

|

The problem with CC 2.1 cards should have been the superscalar arrangement. It was nicely written down by Anandtech here. In short: one SM in CC 2.0 cards works on 2 warps in parallel. Each of these can issue one instruction per cycle for 16 "threads"/pixels/values. With CC 2.1 the design changed: there are still 2 warps with 16 threads each, but both can issue 2 instructions per clock if the next instruction is not dependent on the result of the current one. | |

| ID: 24028 | Rating: 0 | rate:

| |

|

680 SLI 3DMark 11 benchmarks (Guru3D via a VrZone benching session) | |

| ID: 24034 | Rating: 0 | rate:

| |

The problem with CC 2.1 cards should have been the superscalar arrangement. It was nicely written down by Anandtech here. In short: one SM in CC 2.0 cards works on 2 warps in parallel. Each of these can issue one instruction per cycle for 16 "threads"/pixels/values. With CC 2.1 the design changed: there are still 2 warps with 16 threads each, but both can issue 2 instructions per clock if the next instruction is not dependent on the result of the current one. The Anandtech article you've linked was quite enlightening. I failed to compare the number of warp schedulers in my previous post. Since then I've found a much better figure of the two architectures. Comparison of the CC2.1 and CC2.0 architecture: Based on that Anandtech article, and the picture of the GTX 680's SMX, I've concluded that it will be superscalar as well. There are twice as many dispatch units as warp schedulers, while in the CC2.0 architecture their number is equal. There are 4 warp schedulers for 12 CUDA cores in the GTX 680's SMX, so at the moment I think GPUGrid could utilize only 2/3 of its shaders (1024 of 1536), just like on the CC2.1 cards (there are 2 warp schedulers for 6 cuda cores), unless nVidia built some miraculous component into the warp schedulers. In addition, based on the transistor count I think the GTX 680's FP64 capabilities (which are irrelevant at GPUGrid) will be reduced or perhaps omitted. | |

| ID: 24045 | Rating: 0 | rate:

| |
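
The 2/3 estimate discussed above can be put into a rough sketch. This is only a toy model, not a description of how the hardware actually schedules work: it assumes that a fixed share of an SM's cores is always fed, and that the remaining cores are only fed in the fraction of cycles where a second, independent instruction is available (the hypothetical `ilp` parameter). The 128-of-192 split for the GTX 680's SMX is the speculation from the post above, not a confirmed figure.

```python
def usable_fraction(guaranteed: int, total: int, ilp: float) -> float:
    """Fraction of an SM's cores kept busy under a simple dual-issue model.

    guaranteed: cores fed even when no independent second instruction exists
    total:      all cores in the SM/SMX
    ilp:        assumed fraction (0..1) of cycles in which a second,
                independent instruction can be issued (hypothetical knob)
    """
    if not 0.0 <= ilp <= 1.0:
        raise ValueError("ilp must be between 0 and 1")
    extra = total - guaranteed
    return (guaranteed + extra * ilp) / total


# CC 2.1 SM: 48 cores, 32 of them usable without superscalar issue.
cc21_worst = usable_fraction(32, 48, 0.0)

# Speculated GTX 680 SMX: 192 cores, 128 usable if the same ratio holds.
gk104_worst = usable_fraction(128, 192, 0.0)

gtx680_cores = 1536
print(round(gtx680_cores * gk104_worst))  # 1024
```

With `ilp` at 0 the model reproduces the "1024 of 1536" worst case; with `ilp` at 1 all cores are busy. Where real GPUGrid code falls between those two is exactly what the thread cannot know yet.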

They cannot afford to separate gaming and computing. The chips will still need to be the same for economy of scale and there is a higher and higher interest in computing within games. I remember the events before the release of the Fermi architecture: nVidia showed different double precision simulations running much faster in real time on Fermi than on GT200b. I haven't seen anything like that this time. Furthermore there is no mention of ECC at all in the rumors of GTX 680. It looks to me that this time nVidia is going to release their flagship gaming product before the professional one. I don't think they simplified the professional line that much. What if they release a slightly modified GF110 made on 28nm lithography as their professional product line? (efficiency is much more important in the professional product line than peak chip performance - of course it would be faster than the GF110 based Teslas) | |

| ID: 24046 | Rating: 0 | rate:

| |

|

Glad to hear it was the right information for you :) | |

| ID: 24047 | Rating: 0 | rate:

| |

|

Looks like some guys over at XS managed to catch tom's hardware with their pants down. Apparently, tom's briefly exposed some 680 performance graphs on their site and XS member Olivon was able to scrape them before access was removed. Quote from Olivon: An old habit from Tom's Hardware. Important is to be quick LOL! Anyways, the graphs that stand out:    Other relevant (for our purposes) graphs:   | |

| ID: 24058 | Rating: 0 | rate:

| |

|

Release date is supposed to be today! | |

| ID: 24075 | Rating: 0 | rate:

| |

|

2304 is another fancy number, regarding the powers of 2. | |

| ID: 24076 | Rating: 0 | rate:

| |

|

Interesting and tantalizing numbers. Can't wait to see how they perform. | |

| ID: 24077 | Rating: 0 | rate:

| |

|

it appears that they are actually available for real at least in the UK. | |

| ID: 24078 | Rating: 0 | rate:

| |

|

nvidia-geforce-gtx-680-review by Ryan Smith of AnandTech. | |

| ID: 24079 | Rating: 0 | rate:

| |

|

Here in Hungary I can see in stock only the Asus GTX680-2GD5 for 165100HUF, that's 562.5€, or £468.3 (including 27% VAT in Hungary) | |

| ID: 24081 | Rating: 0 | rate:

| |

|

So its compute power has actually decreased significantly from the GTX 580?! The Bulldozer fiasco continues. What a disappointing year for computer hardware. | |

| ID: 24082 | Rating: 0 | rate:

| |

|

It's built for gaming, and that's what it does best. We'll have to wait a few more months for their new compute monster (GK110). | |

| ID: 24083 | Rating: 0 | rate:

| |

|

So far we have no idea of how the performance will be here. | |

| ID: 24084 | Rating: 0 | rate:

| |

|

$499.99 in USA :( | |

| ID: 24085 | Rating: 0 | rate:

| |

|

Summarizing the reviews: gaming performance is as we expected; computing performance is still not known, since folding isn't working on the GTX680 yet. The GPUGrid client probably won't work either without some optimization. | |

| ID: 24086 | Rating: 0 | rate:

| |

|

http://www.tomshardware.com/reviews/geforce-gtx-680-review-benchmark,3161-14.html Moreover, Nvidia limits 64-bit double-precision math to 1/24 of single-precision, protecting its more compute-oriented cards from being displaced by purpose-built gamer boards. The result is that GeForce GTX 680 underperforms GeForce GTX 590, 580 and to a much direr degree, the three competing boards from AMD. Does GPUGRID use 64-bit double-precision math? ____________ Reno, NV Team: SETI.USA | |

| ID: 24087 | Rating: 0 | rate:

| |

|

If somebody can run here on a gtx680, let us know. | |

| ID: 24088 | Rating: 0 | rate:

| |

|

Almost nothing, this should not matter. http://www.tomshardware.com/reviews/geforce-gtx-680-review-benchmark,3161-14.html | |

| ID: 24089 | Rating: 0 | rate:

| |

If somebody can run here on a gtx680, let us know. Should be getting an EVGA version tomorrow morning here in UK - cost me £405. Already been asked to do some other Boinc tests first though. | |

| ID: 24091 | Rating: 0 | rate:

| |

|

It would be nice if you also reported here what you find for other projects - thanks! | |

| ID: 24092 | Rating: 0 | rate:

| |

|

I wonder how this tweaked architecture will perform with these BOINC projects. | |

| ID: 24093 | Rating: 0 | rate:

| |

|

We have a small one, good enough for testing. The code works on Windows with some bugs. We are assessing the performance. | |

| ID: 24094 | Rating: 0 | rate:

| |

|

It would seem NVidia have stopped support for XP; there are no XP drivers for the GTX 680! | |

| ID: 24096 | Rating: 0 | rate:

| |

|

OK have now got my 680 and started by running some standard gpu tasks for Seti. | |

| ID: 24099 | Rating: 0 | rate:

| |

|

Tried to download and run 2 x GPUGRID tasks but both crashed out before completing the download saying acemd.win2382 had stopped responding. | |

| ID: 24101 | Rating: 0 | rate:

| |

|

Just reset the graphics card back to "normal" ie. no overclock and still errors out - this time it did finish downloading but crashed out as soon as it started on the work unit so looks like this project does not yet work on the 680 ? | |

| ID: 24102 | Rating: 0 | rate:

| |

|

stderr_txt: # Using device 0 We couldn't be having a 31-bit overflow on that memory size, could we? | |

| ID: 24106 | Rating: 0 | rate:

| |

stderr_txt: In English please? | |

| ID: 24107 | Rating: 0 | rate:

| |

The GPUGRID application doesn't support the GTX680 yet. We'll have test units soon and - if there are no problems - we'll update over the weekend or early next week. English - GPUGrid's applications don't yet support the GTX680. MJH is working on an app and might get one ready soon; over the weekend or early next week. PS. Your SETI runs show the card has some promise ~16% faster @stock than a GTX580 (244W TDP). Or 30% faster overclocked. Not sure that will be possible here, but you'll know fairly soon. Even if the GTX680 (195W TDP) just matches the GTX580 the performance/power gain might be noteworthy; ~125% performance/Watt, or ~145% at 116% of a GTX580's performance. ____________ FAQ's HOW TO: - Opt out of Beta Tests - Ask for Help | |

| ID: 24109 | Rating: 0 | rate:

| |
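
The performance/Watt figures in the post above follow from simple arithmetic, sketched here. It assumes that TDP is a fair stand-in for actual power draw, which is only an approximation; the function name is made up for illustration.

```python
def perf_per_watt_ratio(rel_perf: float, tdp_new: float, tdp_old: float) -> float:
    """Performance/Watt of the new card relative to the old one (1.0 = equal)."""
    return rel_perf * tdp_old / tdp_new


GTX580_TDP = 244.0  # Watts, as quoted in the post
GTX680_TDP = 195.0  # Watts, as quoted in the post

# GTX 680 merely matching a GTX 580:
same_speed = perf_per_watt_ratio(1.00, GTX680_TDP, GTX580_TDP)
# GTX 680 at 116% of a GTX 580 (the stock SETI result mentioned above):
faster = perf_per_watt_ratio(1.16, GTX680_TDP, GTX580_TDP)

print(f"{same_speed:.0%}")  # 125%
print(f"{faster:.0%}")      # 145%
```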

|

Thanks for that simple to understand reply :) | |

| ID: 24110 | Rating: 0 | rate:

| |

|

Good idea; no point returning lots of failed tasks! | |

| ID: 24111 | Rating: 0 | rate:

| |

|

HPCwire - NVIDIA Launches first Kepler GPUs at gamers; HPC version waiting in the wings. | |

| ID: 24114 | Rating: 0 | rate:

| |

|

Why would there be cooling issues in Linux? I keep my 560Ti448Core very cool by manually setting the fan speed in the nvidia settings application, after setting "Coolbits" to "5" in the xorg.conf. It would seem NVidia have stopped support for XP; there are no XP drivers for the GTX 680! | |

| ID: 24115 | Rating: 0 | rate:

| |

|

Well, if we make the rather speculative presumption that a GF680 would work with Coolbits straight out of the box, then yes we can cool a card on Linux, but AFAIK it only works for one GPU and not for overclocking/downclocking. I think Coolbits was more useful in the distant past, but perhaps it will still work for GF600's. | |

| ID: 24116 | Rating: 0 | rate:

| |

|

It appears there may be another usage of the term "Coolbits" (unfortunately) for some old software. The one I was referring to is part of the nvidia Linux driver, and is set within the Device section of the xorg.conf. | |

| ID: 24117 | Rating: 0 | rate:

| |
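
For anyone who hasn't seen the setting mentioned above, the relevant xorg.conf fragment might look roughly like this. It's a sketch, not a tested configuration: the Identifier is a placeholder, the reading of the value (5 = 1 + 4, where the 4 bit unlocks manual fan control) is my understanding of the NVIDIA Linux driver README, and whether any of it works on a GTX 680 is unknown at this point.

```
Section "Device"
    Identifier "Device0"        # placeholder; keep the name already in your xorg.conf
    Driver     "nvidia"
    Option     "Coolbits" "5"   # assumed: 5 = 1 + 4, with the 4 bit enabling manual fan control
EndSection
```

After restarting X, the fan slider should show up in the nvidia settings application if the driver accepts the option.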

|

Thanks Mowskwoz, we have taken this thread a bit off target, so I might move our fan control on linux posts to a linux thread later. I will look into NVidia CoolBits again. | |

| ID: 24118 | Rating: 0 | rate:

| |

|

Wow, this 680 monster seems to run with the handbrake on: poor performance in OpenCL, worse than the 580 and of course the HD79x0. | |

| ID: 24120 | Rating: 0 | rate:

| |

|

No, that seems to be the case. OpenCL performance is poor at best, although in the single non-OpenCL bench I saw it performed decently. Not great, but at least better than the 580. Double precision performance is abysmal; it looks like ATI will be holding onto that crown for the foreseeable future. I will be curious to see exactly what these projects can get out of the card, but so far it's not all that inspiring on the compute end of things. | |

| ID: 24121 | Rating: 0 | rate:

| |

|

For the 1.8 GHz, LN2 was necessary. That's extreme and usually yields clock speeds ~25% higher than achievable with water cooling. Reportedly the voltage was only 1.2 V, which sounds unbelievable. | |

| ID: 24129 | Rating: 0 | rate:

| |

We have a small one, good enough for testing. The code works on Windows with some bugs. We are assessing the performance. That's pretty good news. I'm glad that AMD managed to put out three different cores that are GCN based. The cheaper cards still have most if not all of the compute capabilities of the HD 7970. Hopefully there will be a testing app soon and I'll be one of the first in line. ;) | |

| ID: 24153 | Rating: 0 | rate:

| |

|

Okay so this thread has been all over the place.. can someone sum up? | |

| ID: 24155 | Rating: 0 | rate:

| |

|

They're testing today. | |

| ID: 24156 | Rating: 0 | rate:

| |

|

Hello: Here is a summary of what I've read in several analyses of the GTX680's compute performance: | |

| ID: 24172 | Rating: 0 | rate:

| |

|

Hey where's that from? Is there more of the good stuff? Did Anandtech update their launch article? | |

| ID: 24180 | Rating: 0 | rate:

| |

|

Yes, looks like Ryan added some more info to the article. He tends to do this - it's good reporting, makes their reviews worth revisiting. | |

| ID: 24185 | Rating: 0 | rate:

| |

|

Why does nvidia cap its 6xx series this way? If they think it would kill their own Tesla series cards.... why do they still sell those when they perform that badly in comparison to the modern desktop cards??? It would be much cheaper for us, and nvidia would sell many more of their desktop cards for grid computing... or they could set an example with 8 uncensored gtx680 chips on one Tesla card for the price a Tesla costs.. | |

| ID: 24186 | Rating: 0 | rate:

| |

Why does nvidia cap its 6xx series this way? If they think it would kill their own Tesla series cards.... plain and simple: they wanted gaming performance and sacrificed computing capabilities which are not needed there. why do they still sell those when they perform that badly in comparison to the modern desktop cards??? It would be much cheaper for us, and nvidia would sell many more of their desktop cards for grid computing... or they could set an example with 8 uncensored gtx680 chips on one Tesla card for the price a Tesla costs.. GK-104 is not censored! it's plainly and simply a mostly pure 32-bit design. i bet they will come up with something completely different for kepler cards. | |

| ID: 24187 | Rating: 0 | rate:

| |

|

Some of us expected this divergence in the GeForce. | |

| ID: 24188 | Rating: 0 | rate:

| |

|

ok, i read both your answers and understood; i had only read somewhere that its performance was cut so as not to match their Tesla. Seems to be a wrong article then ^^ (don't ask where i read that, i don't know anymore). So i believe now the gtx680 is still a good card then ;) | |

| ID: 24189 | Rating: 0 | rate:

| |

So i believe now the gtx680 is still a good card then ;) well, it is - if you know what you get. taken from the CUDA_C guide in CUDA 4.2.6 beta: CC 2.0 compared to CC 3.0, OPs per clock cycle and SM/SMX: 32-bit floating-point: 32 : 192; 64-bit floating-point: 16 : 8; 32-bit integer add: 32 : 168; 32-bit integer shift, compare: 16 : 8; logical operations: 32 : 136; 32-bit integer : 16 : 32; ..... + optimal warp-size seems to have moved up from 32 to 64 now! it's totally different and the apps need to be optimized to take advantage of that. | |

| ID: 24191 | Rating: 0 | rate:

| |
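
To make the per-multiprocessor figures quoted above a bit easier to read, here is a small sketch that turns them into ratios. It uses only the numbers from the post (the incomplete "32-bit integer" entry is left out) and says nothing about whole-card performance, which also depends on the number of multiprocessors and on clock speeds.

```python
# Operations per clock cycle per multiprocessor, as quoted in the post:
# (CC 2.0 SM, CC 3.0 SMX)
OPS_PER_CLOCK = {
    "fp32": (32, 192),
    "fp64": (16, 8),
    "int32 add": (32, 168),
    "int32 shift/compare": (16, 8),
    "logical": (32, 136),
}

# How much one CC 3.0 SMX does per clock relative to one CC 2.0 SM.
for op, (cc20, cc30) in OPS_PER_CLOCK.items():
    print(f"{op}: {cc30 / cc20:.2f}x per multiprocessor")

# FP64 throughput as a share of FP32 throughput within each design.
fp32_20, fp32_30 = OPS_PER_CLOCK["fp32"]
fp64_20, fp64_30 = OPS_PER_CLOCK["fp64"]
fp64_share_cc20 = fp64_20 / fp32_20  # 16 / 32
fp64_share_cc30 = fp64_30 / fp32_30  # 8 / 192, i.e. 1/24
```

The 8 / 192 ratio matches the "1/24 of single-precision" figure quoted from the Tom's Hardware review earlier in the thread.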

|

Another bit to add regarding FP64 performance: apparently GK104 uses 8 dedicated hardware units for this, in addition to the regular 192 shaders per SMX. So they actually spent more transistors to provide a little FP64 capability (for development or sparse usage). | |

| ID: 24192 | Rating: 0 | rate:

| |
